秦农序

数据简化DataSimp导读:前乔治亚理工学院交互计算学院教授、现 谷歌AI科学家雅各布爱森斯坦(JacobEisenstein)博士在麻省理工学院出版社(MIT)发表力作Introductionto Natural Language Processing《自然语言处理介绍》。本文概述并选译各章首段。公号对话框发送“NLP导论”获取中英双语73k字3图24页PDF。内容通俗易懂,欢迎学习分享。

关键词:自然语言处理,Natural Language Processing (NLP),雅各布爱森斯坦(Jacob Eisenstein),博士。

目录

秦农序

I.自然语言处理导论Dr 雅各布 爱森斯坦(Jacob Eisenstein)(63k 字)

A. 《自然语言处理导论》内容概述

B. 《自然语言处理导论》章首译照

目录(Contents) 和前言(Preface)

第一章介绍(Introduction)

第一部分学习(Learning)

第二部分序列和树(Sequences and trees)

第三部分意义(Meaning)

第四部分应用(Applications)

II.雅各布 爱森斯坦(Jacob Eisenstein) 博士简历(6k 字)

A. 雅各布 爱森斯坦(JacobEisenstein) 中文简历

研究

教学

忠告

专业服务

B. 雅各布 爱森斯坦(JacobEisenstein) 英文简历

素材(5b 字)

秦农跋

自然语言处理导论Dr雅各布爱森斯坦(Jacob Eisenstein)

秦陇纪,科学Sciences20191212Thu

2019年10月,麻省理工学院出版社(MIT)线上发行了 雅各布爱森斯坦(Jacob Eisenstein)博士的Introductionto Natural Language Processing《自然语言处理导论》。该书内容由Contents(目录)、Preface(前言)、Introduction(介绍)、Learning(学习)、Sequences and trees(序列和树)、Meaning(意义)和Applications(应用)七部分组成;主体是后面4部分19章:自然语言处理中监督与无监等学习问题,序列与解析树等自然语言的建模方式,语篇语义的理解,以及这些技术在信息抽取、机器翻译和文本生成等具体任务中的应用。最新版PDF开放书587页整本在GitHub开放获取,是了解最新自然语言处理进展不可多得的教材。作者 雅各布爱森斯坦(Jacob Eisenstein)博士是一名 谷歌人工智能(Google AI)研究科学家,之前在 乔治亚理工学院的交互计算学院任教。 II篇是 中英对照简历。

他的个人网页https://jacobeisenstein.github.io/

教材开放地址https://github.com/jacobeisenstein/gt-nlp-class/tree/master/notes

或https://share.weiyun.com/U0QC2eCa

或https://pan.baidu.com/s/1NA8tglYGlA2VLT3FkgTm5A 提取码td74

A.《自然语言处理导论》内容概述

雅各布(Jacob)的新书是本自然语言处理领域教科书,提供关于自然语言处理方法的技术观点,用于 构建理解、生成和操作人类语言的计算机软件。它强调了当代的数据驱动方法,侧重于 监督和非监督机器学习的技术。第一部分通过构建一组贯穿全书的工具,并将它们应用于 基于单词的文本分析,为机器学习奠定了基础。第二部分介绍了 语言的结构化表示,包括 序列、树和图。第三部分探讨了不同的 语言意义表示和分析方法,从 形式逻辑到 神经词嵌入。最后一节详细介绍了自然语言处理的三种变革性应用: 信息提取、机器翻译和文本生成。最后的练习包括 纸笔分析和 软件实现。

作者在前言说,本文综合和提炼了广泛和多样的研究文献,将当代机器学习技术与该领域的语言和计算基础联系起来。它适合用于高等本科和研究生水平的课程,并作为软件工程师和数据科学家的参考。读者应该有计算机编程和大学水平的数学背景。在掌握材料后,学生将具备建立和分析新的自然语言处理系统的技术技能,并了解该领域的最新研究。 请看B的本书前言中文对照。

B.《自然语言处理导论》章首译照

本中英对照译文,节选全部目录、各章页码和首段部分。这本书的Learning(学习)、Sequences and trees(序列和树)、Meaning(意义)和Applications(应用)四个主要章节循序渐进:

学习:这一章节介绍了一套 机器学习工具,它也是整本教科书对不同问题建模的基础。由于重点在于介绍机器学习,因此我们使用的语言任务都非常简单,即以词袋文本分类为模型示例。第四章介绍了一些更具语言意义的文本分类应用。

序列与树:此章节将自然语言作为结构化的数据进行处理,它描述了语言用序列和树进行表示的方法,以及这些表示所添加的限制。第9 章介绍了 有限状态自动机(finite state automata)。

语义:本章从广泛的角度看待基于 文本表达和 计算语义的努力,包括形式逻辑和神经词嵌入等方面。

应用:末章介绍了三种自然语言处理中最重要的应用: 信息抽取、 机器翻译和 文本生成。我们不仅将了解使用前面章节技术所构建的知名系统,同时还会理解神经网络注意力机制等前沿问题。

目录(Contents)和前言(Preface)

Natural Language Processing

Jacob Eisenstein

November 13, 2018

第1页(PDF第3页)目录

Contents

Contents 1

Preface i

Background . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .i

How to use this book . . . . . . . . . . . . . . . . . . . . . . . . . . .ii

1 Introduction 1

1.1 Natural language processing and its neighbors . . . . . . . . . . . .. . . . . 1

1.2 Three themes in natural language processing . . . . . . . . . . . . .. . . . . 6

1.2.1 Learning and knowledge . . . . . . . . . . . . . . . . . . . . . . .. . 6

1.2.2 Search and learning . . . . . . . . . . . . . . . . . . . . . . . 7

1.2.3 Relational, compositional, and distributional perspectives . . . . .. 9

I Learning 11

2 Linear text classification 13

2.1 The bag of words . . . . . . . . . . . . . . . . . . . . . . . . . . .. . 13

2.2 Nave Bayes . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . 17

2.2.1 Types and tokens . . . . . . . . . . . . . . . . . . . . . . . . .19

2.2.2 Prediction . . . . . . . . . . . . . . . . . . . . . . . . . . . . .20

2.2.3 Estimation . . . . . . . . . . . . . . . . . . . . . . . . . . . . .21

2.2.4 Smoothing . . . . . . . . . . . . . . . . . . . . . . . . . . . . .22

2.2.5 Setting hyperparameters . . . . . . . . . . . . . . . . . . . . . .. . . . 23

2.3 Discriminative learning . . . . . . . . . . . . . . . . . . . . . . .. . 24

2.3.1 Perceptron . . . . . . . . . . . . . . . . . . . . . . . . . . . . .25

2.3.2 Averaged perceptron . . . . . . . . . . . . . . . . . . . . . . . 27

2.4 Loss functions and large-margin classification . . . . . . . . . . . .. . . . . 27

2.4.1 Online large margin classification . . . . . . . . . . . . . . . . .. . . 30

2.4.2 *Derivation of the online support vector machine . . . . . . . . . .. 32

2.5 Logistic regression . . . . . . . . . . . . . . . . . . . . . . . . .. . . 35

2.5.1 Regularization . . . . . . . . . . . . . . . . . . . . . . . . . .36

2.5.2 Gradients . . . . . . . . . . . . . . . . . . . . . . . . . . . . .37

2.6 Optimization . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . 37

2.6.1 Batch optimization . . . . . . . . . . . . . . . . . . . . . . . .38

2.6.2 Online optimization . . . . . . . . . . . . . . . . . . . . . . . 39

2.7 *Additional topics in classification . . . . . . . . . . . . . . . . .. . . . . . . 41

2.7.1 Feature selection by regularization . . . . . . . . . . . . . . . .. . . . 41

2.7.2 Other views of logistic regression . . . . . . . . . . . . . . . . .. . . . 41

2.8 Summary of learning algorithms . . . . . . . . . . . . . . . . . . . .. . . . . 43

3 Nonlinear classification 47

3.1 Feedforward neural networks . . . . . . . . . . . . . . . . . . . . .. 48

3.2 Designing neural networks . . . . . . . . . . . . . . . . . . . . . .. 50

3.2.1 Activation functions . . . . . . . . . . . . . . . . . . . . . . .50

3.2.2 Network structure . . . . . . . . . . . . . . . . . . . . . . . . 51

3.2.3 Outputs and loss functions . . . . . . . . . . . . . . . . . . . . .. . . 52

3.2.4 Inputs and lookup layers . . . . . . . . . . . . . . . . . . . . . .. . . 53

3.3 Learning neural networks . . . . . . . . . . . . . . . . . . . . . . .. 53

3.3.1 Backpropagation . . . . . . . . . . . . . . . . . . . . . . . . . 55

3.3.2 Regularization and dropout . . . . . . . . . . . . . . . . . . . . .. . . 57

3.3.3 *Learning theory . . . . . . . . . . . . . . . . . . . . . . . . .58

3.3.4 Tricks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. 59

3.4 Convolutional neural networks . . . . . . . . . . . . . . . . . . . .. . . . . . 62

4 Linguistic applications of classification 69

4.1 Sentiment and opinion analysis . . . . . . . . . . . . . . . . . . . .. . . . . . 69

4.1.1 Related problems . . . . . . . . . . . . . . . . . . . . . . . . .70

4.1.2 Alternative approaches to sentiment analysis . . . . . . . . . . . .. . 72

4.2 Word sense disambiguation . . . . . . . . . . . . . . . . . . . . . .. 73

4.2.1 How many word senses? . . . . . . . . . . . . . . . . . . . . . . .. . 74

4.2.2 Word sense disambiguation as classification . . . . . . . . . . . .. . 75

4.3 Design decisions for text classification . . . . . . . . . . . . . . .. 原由网. . . . . . 76

4.3.1 What is a word? . . . . . . . . . . . . . . . . . . . . . . . . . .76

4.3.2 How many words? . . . . . . . . . . . . . . . . . . . . . . . . 79

4.3.3 Count or binary? . . . . . . . . . . . . . . . . . . . . . . . . .80

4.4 Evaluating classifiers . . . . . . . . . . . . . . . . . . . . . . . .. . . 80

4.4.1 Precision, recall, and F -MEASURE . . . . . . . . . . . . . . .. . . . . 81

4.4.2 Threshold-free metrics . . . . . . . . . . . . . . . . . . . . . .83

4.4.3 Classifier comparison and statistical significance . . . . . . . . .. . . 84

4.4.4 *Multiple comparisons . . . . . . . . . . . . . . . . . . . . . . 87

4.5 Building datasets . . . . . . . . . . . . . . . . . . . . . . . . . .. . . 88

4.5.1 Metadata as labels . . . . . . . . . . . . . . . . . . . . . . . .88

4.5.2 Labeling data . . . . . . . . . . . . . . . . . . . . . . . . . . .88

5 Learning without supervision 95

5.1 Unsupervised learning . . . . . . . . . . . . . . . . . . . . . . . .. . 95

5.1.1 K-means clustering . . . . . . . . . . . . . . . . . . . . . . . 96

5.1.2 Expectation-Maximization (EM) . . . . . . . . . . . . . . . . . . .. . 98

5.1.3 EM as an optimization algorithm . . . . . . . . . . . . . . . . . .. . . 102

5.1.4 How many clusters? . . . . . . . . . . . . . . . . . . . . . . . 103

5.2 Applications of expectation-maximization . . . . . . . . . . . . . . .. . . . . 104

5.2.1 Word sense induction . . . . . . . . . . . . . . . . . . . . . . 104

5.2.2 Semi-supervised learning . . . . . . . . . . . . . . . . . . . . . .. . . 105

5.2.3 Multi-component modeling . . . . . . . . . . . . . . . . . . . . . .. . 106

5.3 Semi-supervised learning . . . . . . . . . . . . . . . . . . . . . . .. 107

5.3.1 Multi-view learning . . . . . . . . . . . . . . . . . . . . . . .108

5.3.2 Graph-based algorithms . . . . . . . . . . . . . . . . . . . . . . .. . . 109

5.4 Domain adaptation . . . . . . . . . . . . . . . . . . . . . . . . . .. . 110

5.4.1 Supervised domain adaptation . . . . . . . . . . . . . . . . . . . .. . 111

5.4.2 Unsupervised domain adaptation . . . . . . . . . . . . . . . . . . .. 112

5.5 *Other approaches to learning with latent variables . . . . . . . . .. . . . . 114

5.5.1 Sampling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .115

5.5.2 Spectral learning . . . . . . . . . . . . . . . . . . . . . . . . .117

II Sequences and trees 123

6 Language models 125

6.1 N-gram language models . . . . . . . . . . . . . . . . . . . . . . . .126

6.2 Smoothing and discounting . . . . . . . . . . . . . . . . . . . . . .. 129

6.2.1 Smoothing . . . . . . . . . . . . . . . . . . . . . . . . . . . . .129

6.2.2 Discounting and backoff . . . . . . . . . . . . . . . . . . . . . .. . . . 130

6.2.3 *Interpolation . . . . . . . . . . . . . . . . . . . . . . . . . . .131

6.2.4 *Kneser-Ney smoothing . . . . . . . . . . . . . . . . . . . . . . .. . . 133

6.3 Recurrent neural network language models . . . . . . . . . . . . . . .. . . . 133

6.3.1 Backpropagation through time . . . . . . . . . . . . . . . . . . . .. . 136

6.3.2 Hyperparameters . . . . . . . . . . . . . . . . . . . . . . . . .137

6.3.3 Gated recurrent neural networks . . . . . . . . . . . . . . . . . .. . . 137

6.4 Evaluating language models . . . . . . . . . . . . . . . . . . . . . .. 139

6.4.1 Held-out likelihood . . . . . . . . . . . . . . . . . . . . . . .139

6.4.2 Perplexity . . . . . . . . . . . . . . . . . . . . . . . . . . . . .140

6.5 Out-of-vocabulary words . . . . . . . . . . . . . . . . . . . . . . .. 141

7 Sequence labeling 145

7.1 Sequence labeling as classification . . . . . . . . . . . . . . . . .. . . . . . . 145

7.2 Sequence labeling as structure prediction . . . . . . . . . . . . . .. . . . . . 147

7.3 The Viterbi algorithm . . . . . . . . . . . . . . . . . . . . . . . .. . . 149

7.3.1 Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .152

7.3.2 Higher-order features . . . . . . . . . . . . . . . . . . . . . .153

7.4 Hidden Markov Models . . . . . . . . . . . . . . . . . . . . . . . . .153

7.4.1 Estimation . . . . . . . . . . . . . . . . . . . . . . . . . . . . .155

7.4.2 Inference . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. 155

7.5 Discriminative sequence labeling with features . . . . . . . . . . . .. . . . . 157

7.5.1 Structured perceptron . . . . . . . . . . . . . . . . . . . . . .160

7.5.2 Structured support vector machines . . . . . . . . . . . . . . . . .. . 160

7.5.3 Conditional random fields . . . . . . . . . . . . . . . . . . . . .. . . . 162

7.6 Neural sequence labeling . . . . . . . . . . . . . . . . . . . . . . .. . 167

7.6.1 Recurrent neural networks . . . . . . . . . . . . . . . . . . . . .. . . 167

7.6.2 Character-level models . . . . . . . . . . . . . . . . . . . . . .169

7.6.3 Convolutional Neural Networks for Sequence Labeling . . . . . . . .170

7.7 *Unsupervised sequence labeling . . . . . . . . . . . . . . . . . . .. . . . . . 170

7.7.1 Linear dynamical systems . . . . . . . . . . . . . . . . . . . . . .. . . 172

7.7.2 Alternative unsupervised learning methods . . . . . . . . . . . . .. 172

7.7.3 Semiring notation and the generalized viterbi algorithm . . . . . .. 172

8 Applications of sequence labeling 175

8.1 Part-of-speech tagging . . . . . . . . . . . . . . . . . . . . . . . .. . 175

8.1.1 Parts-of-Speech . . . . . . . . . . . . . . . . . . . . . . . . . .176

8.1.2 Accurate part-of-speech tagging . . . . . . . . . . . . . . . . . .. . . 180

8.2 Morphosyntactic Attributes . . . . . . . . . . . . . . . . . . . . . .. 182

8.3 Named Entity Recognition . . . . . . . . . . . . . . . . . . . . . . .. 183

8.4 Tokenization . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . 185

8.5 Code switching . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . 186

8.6 Dialogue acts . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . 187

9 Formal language theory 191

9.1 Regular languages . . . . . . . . . . . . . . . . . . . . . . . . . .. . 192

9.1.1 Finite state acceptors . . . . . . . . . . . . . . . . . . . . . . .193

9.1.2 Morphology as a regular language . . . . . . . . . . . . . . . . . .. . 194

9.1.3 Weighted finite state acceptors . . . . . . . . . . . . . . . . . .. . . . 196

9.1.4 Finite state transducers . . . . . . . . . . . . . . . . . . . . . .. . . . 201

9.1.5 *Learning weighted finite state automata . . . . . . . . . . . . . .. . 206

9.2 Context-free languages . . . . . . . . . . . . . . . . . . . . . . . .. . 207

9.2.1 Context-free grammars . . . . . . . . . . . . . . . . . . . . . . .. . . 208

9.2.2 Natural language syntax as a context-free language . . . . . . . . .. 211

9.2.3 A phrase-structure grammar for English . . . . . . . . . . . . . . .. 213

9.2.4 Grammatical ambiguity . . . . . . . . . . . . . . . . . . . . . . .. . . 218

9.3 *Mildly context-sensitive languages . . . . . . . . . . . . . . . . .. . . . . . 218

9.3.1 Context-sensitive phenomena in natural language . . . . . . . . . .. 219

9.3.2 Combinatory categorial grammar . . . . . . . . . . . . . . . . . . .. 220

10 Context-free parsing 225

10.1 Deterministic bottom-up parsing . . . . . . . . . . . . . . . . . . .. . . . . . 226

10.1.1 Recovering the parse tree . . . . . . . . . . . . . . . . . . . . .. . . . 227

10.1.2 Non-binary productions . . . . . . . . . . . . . . . . . . . . . .. . . . 227

10.1.3 Complexity . . . . . . . . . . . . . . . . . . . . . . . . . . . .229

10.2 Ambiguity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . 229

10.2.1 Parser evaluation . . . . . . . . . . . . . . . . . . . . . . . . .230

10.2.2 Local solutions . . . . . . . . . . . . . . . . . . . . . . . . . .231

10.3 Weighted Context-Free Grammars . . . . . . . . . . . . . . . . . . .. . . . . 232

10.3.1 Parsing with weighted context-free grammars . . . . . . . . . . . .. 234

10.3.2 Probabilistic context-free grammars . . . . . . . . . . . . . . . .. . . 235

10.3.3 *Semiring weighted context-free grammars . . . . . . . . . . . . .. . 237

10.4 Learning weighted context-free grammars . . . . . . . . . . . . . . .. . . . . 238

10.4.1 Probabilistic context-free grammars . . . . . . . . . . . . . . . .. . . 238

10.4.2 Feature-based parsing . . . . . . . . . . . . . . . . . . . . . .239

10.4.3 *Conditional random field parsing . . . . . . . . . . . . . . . . .. . . 240

10.4.4 Neural context-free grammars . . . . . . . . . . . . . . . . . . .. . . 242

10.5 Grammar refinement . . . . . . . . . . . . . . . . . . . . . . . . .. . 242

10.5.1 Parent annotations and other tree transformations . . . . . . . . .. . 243

10.5.2 Lexicalized context-free grammars . . . . . . . . . . . . . . . . .. . . 244

10.5.3 *Refinement grammars . . . . . . . . . . . . . . . . . . . . . . .. . . 248

10.6 Beyond context-free parsing . . . . . . . . . . . . . . . . . . . . .. . 249

10.6.1 Reranking . . . . . . . . . . . . . . . . . . . . . . . . . . . . .250

10.6.2 Transition-based parsing . . . . . . . . . . . . . . . . . . . . .. . . . . 251

11 Dependency parsing 257

11.1 Dependency grammar . . . . . . . . . . . . . . . . . . . . . . . . .. 257

11.1.1 Heads and dependents . . . . . . . . . . . . . . . . . . . . . .258

11.1.2 Labeled dependencies . . . . . . . . . . . . . . . . . . . . . . 259

11.1.3 Dependency subtrees and constituents . . . . . . . . . . . . . . ..原由网 . 260

11.2 Graph-based dependency parsing . . . . . . . . . . . . . . . . . . .. . . . . 262

11.2.1 Graph-based parsing algorithms . . . . . . . . . . . . . . . . . .. . . 264

11.2.2 Computing scores for dependency arcs . . . . . . . . . . . . . . .. . 265

11.2.3 Learning . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. 267

11.3 Transition-based dependency parsing . . . . . . . . . . . . . . . . .. . . . . 268

11.3.1 Transition systems for dependency parsing . . . . . . . . . . . . .. . 269

11.3.2 Scoring functions for transition-based parsers . . . . . . . . . .. . . 273

11.3.3 Learning to parse . . . . . . . . . . . . . . . . . . . . . . . . .274

11.4 Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . 277

III Meaning 283

12 Logical semantics 285

12.1 Meaning and denotation . . . . . . . . . . . . . . . . . . . . . . .. . 286

12.2 Logical representations of meaning . . . . . . . . . . . . . . . . .. . . . . . . 287

12.2.1 Propositional logic . . . . . . . . . . . . . . . . . . . . . . . .287

12.2.2 First-order logic . . . . . . . . . . . . . . . . . . . . . . . . .. 288

12.3 Semantic parsing and the lambda calculus . . . . . . . . . . . . . .. . . . . . 291

12.3.1 The lambda calculus . . . . . . . . . . . . . . . . . . . . . . .292

12.3.2 Quantification . . . . . . . . . . . . . . . . . . . . . . . . . .. 293

12.4 Learning semantic parsers . . . . . . . . . . . . . . . . . . . . . .. . 296

12.4.1 Learning from derivations . . . . . . . . . . . . . . . . . . . . .. . . . 297

12.4.2 Learning from logical forms . . . . . . . . . . . . . . . . . . . .. . . . 299

12.4.3 Learning from denotations . . . . . . . . . . . . . . . . . . . . .. . . 301

13 Predicate-argument semantics 305

13.1 Semantic roles . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . 307

13.1.1 VerbNet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .308

13.1.2 Proto-roles and PropBank . . . . . . . . . . . . . . . . . . . . .. . . . 309

13.1.3 FrameNet . . . . . . . . . . . . . . . . . . . . . . . . . . . . .310

13.2 Semantic role labeling . . . . . . . . . . . . . . . . . . . . . . .. . . 312

13.2.1 Semantic role labeling as classification . . . . . . . . . . . . .. . . . . 312

13.2.2 Semantic role labeling as constrained optimization . . . . . . . .. . 315

13.2.3 Neural semantic role labeling . . . . . . . . . . . . . . . . . . .. . . . 317

13.3 Abstract Meaning Representation . . . . . . . . . . . . . . . . . . .. . . . . . 318

13.3.1 AMR Parsing . . . . . . . . . . . . . . . . . . . . . . . . . . .321

14 Distributional and distributed semantics 325

14.1 The distributional hypothesis . . . . . . . . . . . . . . . . . . . .. . 325

14.2 Design decisions for word representations . . . . . . . . . . . . . .. . . . . . 327

14.2.1 Representation . . . . . . . . . . . . . . . . . . . . . . . . . .327

14.2.2 Context . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. 328

14.2.3 Estimation . . . . . . . . . . . . . . . . . . . . . . . . . . . .. 329

14.3 Latent semantic analysis . . . . . . . . . . . . . . . . . . . . . .. . . 329

14.4 Brown clusters . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . 331

14.5 Neural word embeddings . . . . . . . . . . . . . . . . . . . . . . .. 334

14.5.1 Continuous bag-of-words (CBOW) . . . . . . . . . . . . . . . . . .. . 334

14.5.2 Skipgrams . . . . . . . . . . . . . . . . . . . . . . . . . . . . .335

14.5.3 Computational complexity . . . . . . . . . . . . . . . . . . . . .. . . 335

14.5.4 Word embeddings as matrix factorization . . . . . . . . . . . . . .. . 337

14.6 Evaluating word embeddings . . . . . . . . . . . . . . . . . . . . .. 338

14.6.1 Intrinsic evaluations . . . . . . . . . . . . . . . . . . . . . . .339

14.6.2 Extrinsic evaluations . . . . . . . . . . . . . . . . . . . . . . .339

14.6.3 Fairness and bias . . . . . . . . . . . . . . . . . . . . . . . . .340

14.7 Distributed representations beyond distributional statistics . . . .. . . . . . 341

14.7.1 Word-internal structure . . . . . . . . . . . . . . . . . . . . . .. . . . 341

14.7.2 Lexical semantic resources . . . . . . . . . . . . . . . . . . . .. . . . . 343

14.8 Distributed representations of multiword units . . . . . . . . . . .. . . . . . 344

14.8.1 Purely distributional methods . . . . . . . . . . . . . . . . . . .. . . 344

14.8.2 Distributional-compositional hybrids . . . . . . . . . . . . . . .. . . 345

14.8.3 Supervised compositional methods . . . . . . . . . . . . . . . . .. . 346

14.8.4 Hybrid distributed-symbolic representations . . . . . . . . . . . .. . 346

15 Reference Resolution 351

15.1 Forms of referring expressions . . . . . . . . . . . . . . . . . . .. . . . . . . 352

15.1.1 Pronouns . . . . . . . . . . . . . . . . . . . . . . . . . . . . .352

15.1.2 Proper Nouns . . . . . . . . . . . . . . . . . . . . . . . . . . .357

15.1.3 Nominals . . . . . . . . . . . . . . . . . . . . . . . . . . . . .357

15.2 Algorithms for coreference resolution . . . . . . . . . . . . . . . .. . . . . . 358

15.2.1 Mention-pair models . . . . . . . . . . . . . . . . . . . . . . .359

15.2.2 Mention-ranking models . . . . . . . . . . . . . . . . . . . . . .. . . 360

15.2.3 Transitive closure in mention-based models . . . . . . . . . . . .. . . 361

15.2.4 Entity-based models . . . . . . . . . . . . . . . . . . . . . . .362

15.3 Representations for coreference resolution . . . . . . . . . . . . .. . . . . . . 367

15.3.1 Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. 367

15.3.2 Distributed representations of mentions and entities . . . . . . .. . . 370

15.4 Evaluating coreference resolution . . . . . . . . . . . . . . . . . .. . . . . . . 373

16 Discourse 379

16.1 Segments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . 379

16.1.1 Topic segmentation . . . . . . . . . . . . . . . . . . . . . . . .380

16.1.2 Functional segmentation . . . . . . . . . . . . . . . . . . . . . .. . . . 381

16.2 Entities and reference . . . . . . . . . . . . . . . . . . . . . . .. . . . 381

16.2.1 Centering theory . . . . . . . . . . . . . . . . . . . . . . . . .382

16.2.2 The entity grid . . . . . . . . . . . . . . . . . . . . . . . . . .383

16.2.3 *Formal semantics beyond the sentence level . . . . . . . . . . . .. . 384

16.3 Relations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . 385

16.3.1 Shallow discourse relations . . . . . . . . . . . . . . . . . . . .. . . . 385

16.3.2 Hierarchical discourse relations . . . . . . . . . . . . . . . . .. . . . . 389

16.3.3 Argumentation . . . . . . . . . . . . . . . . . . . . . . . . . .392

16.3.4 Applications of discourse relations . . . . . . . . . . . . . . . .. . . . 393

IV Applications 401

17 Information extraction 403

17.1 Entities . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . . 405

17.1.1 Entity linking by learning to rank . . . . . . . . . . . . . . . .. . . . 406

17.1.2 Collective entity linking . . . . . . . . . . . . . . . . . . . . .. . . . . 408

17.1.3 *Pairwise ranking loss functions . . . . . . . . . . . . . . . . .. . . . 409

17.2 Relations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . 411

17.2.1 Pattern-based relation extraction . . . . . . . . . . . . . . . . .. . . . 412

17.2.2 Relation extraction as a classification task . . . . . . . . . . .. . . . . 413

17.2.3 Knowledge base population . . . . . . . . . . . . . . . . . . . . .. . . 416

17.2.4 Open information extraction . . . . . . . . . . . . . . . . . . . .. . . 419

17.3 Events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . 420

17.4 Hedges, denials, and hypotheticals . . . . . . . . . . . . . . . . .. . . . . . . 422

17.5 Question answering and machine reading . . . . . . . . . . . . . . .. . . . . 424

17.5.1 Formal semantics . . . . . . . . . . . . . . . . . . . . . . . . .424

17.5.2 Machine reading . . . . . . . . . . . . . . . . . . . . . . . . .425

18 Machine translation 431

18.1 Machine translation as a task . . . . . . . . . . . . . . . . . . . .. . 431

18.1.1 Evaluating translations . . . . . . . . . . . . . . . . . . . . . .. . . . 433

18.1.2 Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. 435

18.2 Statistical machine translation . . . . . . . . . . . . . . . . . . .. . . 436

18.2.1 Statistical translation modeling . . . . . . . . . . . . . . . . .. . . . . 437

18.2.2 Estimation . . . . . . . . . . . . . . . . . . . . . . . . . . . .. 438

18.2.3 Phrase-based translation . . . . . . . . . . . . . . . . . . . . .. . . . . 439

18.2.4 *Syntax-based translation . . . . . . . . . . . . . . . . . . . . .. . . . 441

18.3 Neural machine translation . . . . . . . . . . . . . . . . . . . . .. . 442

18.3.1 Neural attention . . . . . . . . . . . . . . . . . . . . . . . . .444

18.3.2 *Neural machine translation without recurrence . . . . . . . . . .. . 446

18.3.3 Out-of-vocabulary words . . . . . . . . . . . . . . . . . . . . . .. . . 448

18.4 Decoding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . 449

18.5 Training towards the evaluation metric . . . . . . . . . . . . . . .. . . . . . 451

19 Text generation 457

19.1 Data-to-text generation . . . . . . . . . . . . . . . . . . . . . . .. . . 457

19.1.1 Latent data-to-text alignment . . . . . . . . . . . . . . . . . . .. . . . 459

19.1.2 Neural data-to-text generation . . . . . . . . . . . . . . . . . .. . . . 460

19.2 Text-to-text generation . . . . . . . . . . . . . . . . . . . . . . .. . . 464

19.2.1 Neural abstractive summarization . . . . . . . . . . . . . . . . .. . . 464

19.2.2 Sentence fusion for multi-document summarization . . . . . . . . .. 465

19.3 Dialogue . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . . 466

19.3.1 Finite-state and agenda-based dialogue systems . . . . . . . . . .. . 467

19.3.2 Markov decision processes . . . . . . . . . . . . . . . . . . . . .. . . 468

19.3.3 Neural chatbots . . . . . . . . . . . . . . . . . . . . . . . . . .470

A Probability 475

A.1 Probabilities of event combinations . . . . . . . . . . . . . . . . .. . . . . . . 475

A.1.1 Probabilities of disjoint events . . . . . . . . . . . . . . . . . .. . . . 476

A.1.2 Law of total probability . . . . . . . . . . . . . . . . . . . . . .. . . . 477

A.2 Conditional probability and Bayes’ rule . . . . . . . . . . . . . . .. . . . . . 477

A.3 Independence . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . 479

A.4 Random variables . . . . . . . . . . . . . . . . . . . . . . . . . . .. . 480

A.5 Expectations . . . . . . . . . . . . . . . . . . . . . . . . . . . . .. . . 481

A.6 Modeling and estimation . . . . . . . . . . . . . . . . . . . . . . .. . 482

B Numerical optimization 485

B.1 Gradient descent . . . . . . . . . . . . . . . . . . . . . . . . . . .. . 486

B.2 Constrained optimization . . . . . . . . . . . . . . . . . . . . . . .. 486

B.3 Example: Passive-aggressive online learning . . . . . . . . . . . . .. . . . . 487

Bibliography 489

Under contract with MIT Press, shared under CC-BY-NC-ND license.

第i页(PDF第13页)前言

前言

本文的目标是关注自然语言处理的核心子集,该子集由学习和搜索的概念统一起来。一组紧凑的方法可以解决自然语言处理中的许多问题:

搜索。维特比(Viterbi),CKY,最小生成树,移位减少,整数线性规划,波束搜索。

学习。最大似然估计,逻辑回归,感知器,期望最大化,矩阵分解,反向传播。

本文介绍了这些方法的工作原理,以及如何将它们应用于广泛的任务:文档分类,词义消歧,词性标记,命名实体识别,解析,共指解析,关系提取,话语分析,语言建模和机器翻译。

背景

因为自然语言处理借鉴了许多不同的知识传统,所以几乎所有接触自然语言的人都会以一种或另一种方式感到准备不足。以下是预期的摘要,您可以在其中了解更多信息:

数学和机器学习。本书假设背景为多元演算和线性代数:向量,矩阵,导数和偏导数。您还应该熟悉概率和统计数据。附录A中对基本概率进行了综述,附录B中对数值优化进行了最小回顾。对于线性代数, 斯特朗(Strang)2016的在线课程和教科书提供了出色的综述。 迪森罗斯(Deisenroth)等2018目前正在准备一本关于机器学习数学的教科书,可以在网上找到草案.1有关概率建模和估计的介绍,请参见 詹姆士(James)等2013;对于同一材料的更高级和更全面的讨论,经典参考文献是 哈斯蒂(Hastie)等2009。

语言学。除了名词和动词之类的基本概念(您在英语语法研究中可能遇到过)之外,这本书不接受语言学的正式培训。在整个文本中,根据需要引入了语言学的思想,包括形态和语法(第9章),语义(第12和13章)和话语(第16章)的讨论。在以应用程序为重点的第4、8和18章中也出现了语言问题。 本德(Bender)2013为自然语言处理的学生提供了语言学的简短指南。我们鼓励您从此处开始,然后阅读更全面的入门教科书(例如, 阿克玛吉恩(Akmajian)等2010; 弗洛金(Fromkin)等2013)。

计算机科学。这本书针对的是计算机科学家,假定他们已参加了有关算法分析和复杂性理论的入门课程。特别是,您应该熟悉算法的时间和内存成本的渐近分析,以及动态编程的基础。 科门(Cormen)等人2009提供了有关算法的经典文章;有关计算理论的介绍,请参见 阿罗拉(Arora)和巴拉克(Barak)2009和 西普瑟(Sipser)2012。

如何使用这本书

介绍之后,教科书分为四个主要单元:

学习。本节建立了一组机器学习工具,这些工具将在其他各节中使用。因为重点放在机器学习上,所以文本表示和语言现象通常很简单:“单词袋(bag-of-words)”文本分类被视为模型示例。第4章介绍了一些基于单词的文本分析在语言上更有趣的应用程序。

序列和树。本节介绍将语言视为结构化现象的方法。它描述了序列和树表示形式以及它们促进的算法,以及这些表示形式所施加的限制。第9章介绍了有限状态自动机,并简要概述了英语语法的无上下文说明。

含义。本节从形式逻辑到神经词嵌入,广泛介绍了表示和计算文本含义的工作。它还包括与语义密切相关的两个主题:歧义引用的解析和多句话语结构的分析。

应用程序。最后一部分提供了有关自然语言处理的三个最突出应用的篇幅长度的处理:信息提取,机器翻译和文本生成。这些应用程序中的每一个都应具有自己的教科书长度处理方法(科恩(Koehn)2009年; 格里什曼(Grishman)2012年; 赖特(Reiter)和戴尔(Dale)2000年)。这里的各章介绍了一些使用本书较早建立的形式主义和方法的最著名的系统,同时介绍了诸如神经注意力的方法。

每章均包含一些高级材料,并标有星号。可以安全地省略此材料,而不会在以后引起误解。但是,即使没有这些高级部分,对于一个学期的课程而言,该文本也太长了,因此,教师将不得不在各章中进行选择。

第1-3章提供了将在整本书中使用的构造块,第4章介绍了语言技术实践的一些关键方面。语言模型(第6章),序列标记(第7章)和解析(第10和11章)是自然语言处理中的规范主题,而分布式词嵌入(第14章)已变得无处不在。在这些应用程序中,机器翻译(第18章)是最佳选择:比 信息提取更具凝聚力,比 文本生成更成熟。许多学生将从附录 A中的概率复习中受益。

_重点关注机器学习的课程应增加有关无监督学习的章节(第5章)。有关 谓词参数语义(第13章), 参考解析(第15章)和 文本生成(第19章)的章节尤其受到 机器学习(包括深度神经网络和学习搜索)的最新进展的影响。

_语言学课程应增加有关顺序标签(第8章),形式语言理论(第9章),语义(第12和13章)和话语(第16章)的应用的章节。

_对于一门具有更多应用重点的课程,我建议使用以下几章:序列标记的应用(第8章),谓词自变量语义(第13章),信息提取(第17章)和文本生成(第19章)。

致谢

一些同事,学生和朋友阅读了各自专业领域的章节初稿,包括约夫阿齐(Yoav Artzi),凯文杜(Kevin Duh),纪衡(Heng Ji),杰西李(Jessy Li),布伦丹奥康纳(Brendan O'Connor),尤瓦尔品特(Yuval Pinter),肖恩灵拉米雷斯(Shawn Ling Ramirez),内森施耐德(Nathan Schneider),帕梅拉夏皮罗(Pamela Shapiro),诺亚史密斯(Noah A. Smith),桑迪普索尼(Sandeep Soni)和卢克泽特尔默耶(Luke Zettlemoyer)。我还要感谢匿名审阅者,特别是 审阅者4,他们提供了逐行的详细编辑和建议。文本得益于与我的编辑玛丽鲁夫金李(Marie Lufkin Lee),凯文墨菲(Kevin Murphy,),肖恩灵拉米雷斯(Shawn Ling Ramirez)和邦妮韦伯(Bonnie Webber)的高层讨论。此外,许多学生、同事、朋友和家人在早期草稿中发现错误或推荐了关键参考文献。

其中包括:巴明巴蒂亚(Parminder Bhatia),金伯利卡拉斯(Kimberly Caras),蔡嘉豪(Jiahao Cai),贾斯汀陈(Justin Chen),罗道夫德尔蒙特(Rodolfo Delmonte),莫尔塔扎杜利亚瓦拉(Murtaza Dhuliawala),杜彦涛(Yantao Du),芭芭拉爱森斯坦(Barbara Eisenstein),路易斯CF里贝罗(Luiz CF Ribeiro),克里斯顾(Chris Gu),约书亚基林斯沃思(Joshua Killingsworth),乔纳森梅(Jonathan May),塔哈梅加尼(Taha Merghani),古斯莫诺德(Gus Monod),拉格文德拉穆拉利(Raghavendra Murali),奈迪什奈尔(Nidish Nair),布伦丹奥康纳(Brendan O'Connor),丹奥奈塔(Dan Oneata),布兰登派克(Brandon Peck),尤瓦尔品特(Yuval Pinter),内森施耐德(Nathan Schneider),沉建豪(Jianhao Shen),孙哲伟(Zhewei Sun),鲁宾徐(Rubin Tsui),阿什温坎纳帕卡姆文吉穆尔(Ashwin Cunnapakkam Vinjimur),丹尼弗朗德西奇(Denny Vrandecic),威廉杨王(William Yang Wang),克莱华盛顿(Clay Washington),伊山韦库(Ishan Waykul),杨敖博(Aobo Yang),泽维尔姚(Xavier Yao),张宇宇(Yuyu Zhang,)和几位 匿名评论员。克莱 华盛顿(Clay Washington)测试了一些编程练习,瓦伦古普塔(Varun Gupta)测试了一些书面练习。感谢凯文徐(Kelvin Xu)分享了图19.3的高分辨率版本。

这本书的大部分是我在佐治亚理工学院交互计算学院期间撰写的。我感谢学校对这个项目的支持,也感谢我的同事们在我的职业生涯开始时所给予的帮助和支持。我还要感谢(并致歉)佐治亚理工学院CS 4650和7650中的许多学生,他们遭受了早期文本的折磨。这本书 献给我父母。

Preface

The goal of this text is focus on a core subset of the natural languageprocessing, unified by the concepts of learning and search. A remarkable numberof problems in natural language processing can be solved by a compact set ofmethods:

Search. Viterbi, CKY, minimum spanning tree, shift-reduce, integer linearprogramming, beam search.

Learning. Maximum-likelihood estimation, logistic regression, perceptron,expectationmaximization, matrix factorization, backpropagation.

This text explains how these methods work, and how they can be applied toa wide range of tasks: document classification, word sense disambiguation,part-of-speech tagging, named entity recognition, parsing, coreferenceresolution, relation extraction, discourse analysis, language modeling, andmachine translation.

Background

Because natural language processing draws on many different intellectualtraditions, almost everyone who approaches it feels underprepared in one way oranother. Here is a summary of what is expected, and where you can learn more:

Mathematics and machine learning. The text assumes a backgroundin multivariate calculus and linear algebra: vectors, matrices, derivatives,and partial derivatives. You should also be familiar with probability andstatistics. A review of basic probability is found in Appendix A, and a minimalreview of numerical optimization is found in Appendix B. For linear algebra,the online course and textbook from Strang (2016) provide an excellent review.Deisenroth et al. (2018) are currently preparing a textbook on Mathematics forMachine Learning, a draft can be found online.1 For an introduction toprobabilistic modeling and estimation, see James et al. (2013); for a moreadvanced and comprehensive discussion of the same material, the classic referenceis Hastie et al. (2009).

Linguistics. This book assumes no formal training in linguistics, aside from elementaryconcepts likes nouns and verbs, which you have probably encountered in thestudy of English grammar. Ideas from linguistics are introduced throughout thetext as needed, including discussions of morphology and syntax (chapter 9),semantics (chapters 12 and 13), and discourse (chapter 16). Linguistic issuesalso arise in the application-focused chapters 4, 8, and 18. A short guide tolinguistics for students of natural language processing is offered by Bender(2013); you are encouraged to start there, and then pick up a morecomprehensive introductory textbook (e.g., Akmajian et al., 2010; Fromkin etal., 2013).

Computer science. The book is targeted at computer scientists, who are assumed to have takenintroductory courses on the analysis of algorithms and complexity theory. In particular,you should be familiar with asymptotic analysis of the time and memory costs ofalgorithms, and with the basics of dynamic programming. The classic text onalgorithms is offered by Cormen et al. (2009); for an introduction to thetheory of computation, see Arora and Barak (2009) and Sipser (2012).

How to use this book

After the introduction, the textbook is organized into four main units:

Learning. This section builds up a set of machine learning tools that will be used throughoutthe other sections. Because the focus is on machine learning, the textrepresentations and linguistic phenomena are mostly simple: “bag-of-words” textclassification is treated as a model example. Chapter 4 describes some of themore linguistically interesting applications of word-based text analysis.

Sequences and trees. This section introduces the treatment of language as astructured phenomena. It describes sequence and tree representations and thealgorithms that they facilitate, as well as the limitations that theserepresentations impose. Chapter 9 introduces finite state automata and brieflyoverviews a context-free account of English syntax.

Meaning. This section takes a broad view of efforts to represent and computemeaning from text, ranging from formal logic to neural word embeddings. It alsoincludes two topics that are closely related to semantics: resolution ofambiguous references, and analysis of multi-sentence discourse structure.

Applications. The final section offers chapter-length treatments on three of the mostprominent applications of natural language processing: information extraction,machine translation, and text generation. Each of these applications merits atextbook length treatment of its own (Koehn, 2009; Grishman, 2012; Reiter andDale, 2000); the chapters here explain some of the most well known systemsusing the formalisms and methods built up earlier in the book, whileintroducing methods such as neural attention.

Each chapter contains some advanced material, which is marked with anasterisk. This material can be safely omitted without causing misunderstandingslater on. But even without these advanced sections, the text is too long for asingle semester course, so instructors will have to pick and choose among thechapters.

Chapters 1-3 provide building blocks that will be used throughout thebook, and chapter 4 describes some critical aspects of the practice of languagetechnology. Language models (chapter 6), sequence labeling (chapter 7), andparsing (chapter 10 and 11) are canonical topics in natural languageprocessing, and distributed word embeddings (chapter 14) have becomeubiquitous. Of the applications, machine translation (chapter 18) is the bestchoice: it is more cohesive than information extraction, and more mature thantext generation. Many students will benefit from the review of probability inAppendix A.

_ A course focusing on machine learning should add the chapter onunsupervised learning (chapter 5). The chapters on predicate-argument semantics(chapter 13), reference resolution (chapter 15), and text generation (chapter19) are particularly influenced by recent progress in machine learning,including deep neural networks and learning to search.

_ A course with a more linguistic orientation should add the chapters onapplications of sequence labeling (chapter 8), formal language theory (chapter9), semantics (chapter 12 and 13), and discourse (chapter 16).

_ For a course with a more applied focus, I recommend the chapters onapplications of sequence labeling (chapter 8), predicate-argument semantics(chapter 13), information extraction (chapter 17), and text generation (chapter19).

Acknowledgments

Several colleagues, students, and friends read early drafts of chapters intheir areas of expertise, including Yoav Artzi, Kevin Duh, Heng Ji, Jessy Li,Brendan O’Connor, Yuval Pinter, Shawn Ling Ramirez, Nathan Schneider, PamelaShapiro, Noah A. Smith, Sandeep Soni, and Luke Zettlemoyer. I also thank theanonymous reviewers, particularly reviewer 4, who provided detailedline-by-line edits and suggestions. The text benefited from highlevel discussionswith my editor Marie Lufkin Lee, as well as Kevin Murphy, Shawn Ling Ramirez,and Bonnie Webber. In addition, there are many students, colleagues, friends, andfamily who found mistakes in early drafts, or who recommended key references.

These include: Parminder Bhatia, Kimberly Caras, Jiahao Cai, Justin Chen,Rodolfo Delmonte, Murtaza Dhuliawala, Yantao Du, Barbara Eisenstein, Luiz C. F.Ribeiro, Chris Gu, Joshua Killingsworth, Jonathan May, Taha Merghani, GusMonod, Raghavendra Murali, Nidish Nair, Brendan O’Connor, Dan Oneata, BrandonPeck, Yuval Pinter, Nathan Schneider, Jianhao Shen, Zhewei Sun, Rubin Tsui,Ashwin Cunnapakkam Vinjimur, Denny Vrandecic, William Yang Wang, ClayWashington, Ishan Waykul, Aobo Yang, Xavier Yao, Yuyu Zhang, and severalanonymous commenters. Clay Washington tested some of the programming exercises,and Varun Gupta tested some of the written exercises. Thanks to Kelvin Xu forsharing a high-resolution version of Figure 19.3.

Most of the book was written while I was at Georgia Tech’s School ofInteractive Computing. I thank the School for its support of this project, andI thank my colleagues there for their help and support at the beginning of myfaculty career. I also thank (and apologize to) the many students in GeorgiaTech’s CS 4650 and 7650 who suffered through early versions of the text. Thebook is dedicated to my parents.

第v页(PDF第17页)符号

符号

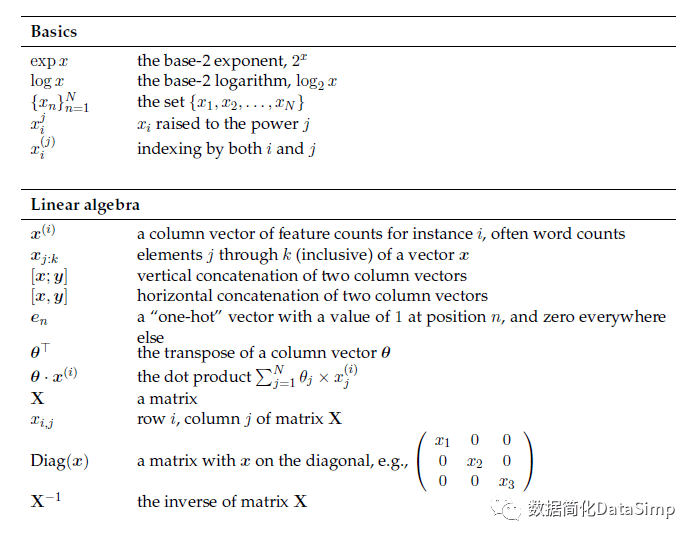

通常,单词,单词计数和其他类型的观察结果用罗马字母(a; b; c);参数用希腊字母(; ; )表示。对于随机变量x和参数,矢量用粗体表示。其他有用的符号在下表中指出。

Notation

As a general rule, words, word counts, and other types of observations areindicated with Roman letters (a; b; c); parameters are indicated with Greekletters (; ; ). Vectors are indicated with bold for both randomvariables x and parameters . Other useful notations are indicated in the tablebelow.

图2 :前言的注释( 后页Textdatasets 和Probabilities 和Machinelearning 略)

第一章介绍(Introduction)

第1页(PDF第19页)第一章介绍

第1章介绍

自然语言处理是使计算机可以访问人类语言的一组方法。在过去的十年中,自然语言处理已嵌入我们的日常生活中:自动机器翻译在网络和社交媒体中无处不在;文本分类可防止我们的电子邮件收件箱在大量垃圾邮件中崩溃;搜索引擎已经从字符串匹配和网络分析扩展到了高度的语言复杂性;对话系统提供了越来越普遍和有效的方式来获取和共享信息。

这些不同的应用程序基于一组通用的思想,并利用算法,语言学,逻辑,统计信息等。本文的目的是提供对这些基础的概述。技术乐趣将从下一章开始。本章的其余部分将自然语言处理与其他学科联系起来,确定当代自然语言处理中的一些高级主题,并为读者提供最佳途径。

1.1自然语言处理及其邻居

自然语言处理借鉴了许多其他的智力传统,从形式语言学到统计物理学。本节简要介绍一些与其最接近的邻居有关的自然语言处理。

1.2自然语言处理中的三个主题

自然语言处理涵盖了各种各样的任务,方法和语言现象。但是,尽管科学文章的 摘要(x 16.3.4)与西班牙语动词后缀模式的 标识(x 9.1.4)之间存在明显的不可通约性,但还是出现了一些通用主题。引言的其余部分集中在这些主题上,这些主题将通过文本以各种形式重复出现。每个主题都可以表示为关于如何处理自然语言的两种极端观点之间的对立。本文中讨论的方法通常可以放在这两个极端之间的连续体上。

Chapter 1

Introduction

Natural language processing is the set of methods for making humanlanguage accessible to computers. In the past decade, natural languageprocessing has become embedded in our daily lives: automatic machinetranslation is ubiquitous on the web and in social media; text classificationkeeps our email inboxes from collapsing under a deluge of spam; search engineshave moved beyond string matching and network analysis to a high degree oflinguistic sophistication; dialog systems provide an increasingly common and effectiveway to get and share information.

These diverse applications are based on a common set of ideas, drawing onalgorithms, linguistics, logic, statistics, and more. The goal of this text isto provide a survey of these foundations. The technical fun starts in the nextchapter; the rest of this current chapter situates natural language processingwith respect to other intellectual disciplines, identifies some high-levelthemes in contemporary natural language processing, and advises the reader onhow best to approach the subject.

1.1 Natural language processing and its neighbors

Natural language processing draws on many other intellectual traditions,from formal linguistics to statistical physics. This section briefly situatesnatural language processing with respect to some of its closest neighbors.

1.2 Three themes in natural language processing

Natural language processing covers a diverse range of tasks, methods, andlinguistic phenomena. But despite the apparent incommensurability between, say,the summarization of scientific articles (x 16.3.4) and the identification ofsuffix patterns in Spanish verbs (x 9.1.4), some general themes emerge. Theremainder of the introduction focuses on these themes, which will recur invarious forms through the text. Each theme can be expressed as an oppositionbetween two extreme viewpoints on how to process natural language. The methodsdiscussed in the text can usually be placed somewhere on the continuum betweenthese two extremes.

第一部分学习(Learning)

第11页(PDF第29页)第一部分学习

Part I

Learning

第13页(PDF第31页)第2章线形文本分类

第2章线性文本分类

我们从文本分类问题开始:给定文本文档,为其分配 离散标签y∈Y,其中Y是可能的标签集。文本分类有许多应用,从垃圾邮件过滤到电子病历分析。本章从数学的角度描述了一些最著名和最有效的文本分类算法,这些算法应有助于您理解它们的作用以及为什么起作用。文本分类也是更复杂的自然语言处理任务的基础。对于没有机器学习或统计学背景的读者来说,本章中的内容比后面的大部分内容都要花更多的时间来消化。但是,随着这些基本分类算法背后的数学原理在本书中的其他上下文中再次出现,这项投资将获得回报。

Chapter 2

Linear text classification

We begin with the problem of text classification: given a textdocument, assign it a discrete label y ∈Y, where Y is the set of possible labels. Text classification has manyapplications, from spam filtering to the analysis of electronic health records.This chapter describes some of the most well known and effective algorithms fortext classification, from a mathematical perspective that should help youunderstand what they do and why they work. Text classification is also abuilding block in more elaborate natural language processing tasks. For readerswithout a background in machine learning or statistics, the material in thischapter will take more time to digest than most of the subsequent chapters. Butthis investment will pay off as the mathematical principles behind these basicclassification algorithms reappear in other contexts throughout the book.

第47页(PDF第65页)第3章非线性分类

第3章非线性分类

线性分类似乎是我们自然语言处理所需要的。词袋表示法本质上是高维的,特征的数量通常大于标记的训练实例的数量。这意味着通常可以找到一个完全适合训练数据的线性分类器,甚至可以适合训练实例的任意标签!因此,转向非线性分类可能只会增加过度拟合的风险。此外,对于许多任务, 词汇特征(单词)在孤立的情况下是有意义的,并且可以提供有关实例标签的独立证据-与计算机视觉不同,计算机视觉中的单个像素很少提供信息,必须进行整体评估以使图像有意义。由于这些原因,自然语言处理历来集中于线性分类。

Chapter 3

Nonlinear classification

Linear classification may seem like all we need for natural languageprocessing. The bagof-words representation is inherently high dimensional, andthe number of features is often larger than the number of labeled traininginstances. This means that it is usually possible to find a linear classifierthat perfectly fits the training data, or even to fit any arbitrary labeling ofthe training instances! Moving to nonlinear classification may therefore onlyincrease the risk of overfitting. Furthermore, for many tasks, lexicalfeatures (words) are meaningful in isolation, and can offer independentevidence about the instance label — unlike computer vision, where individualpixels are rarely informative, and must be evaluated holistically to make senseof an image. For these reasons, natural language processing has historicallyfocused on linear classification.

第69页(PDF第87页)第4章分类的语言应用

第4章分类的语言应用

涵盖了几种分类技术之后,本章将重点从数学转移到语言应用。在本章的后面,我们将考虑文本分类中涉及的设计决策以及评估的最佳实践。

4.1情感与观点分析

文本分类的一种流行应用是自动确定诸如产品评论和社交媒体帖子之类的文档的 情感或 观点极性。例如,营销人员有兴趣知道人们如何响应广告,服务和产品 (Hu and Liu,2004);社会科学家关注情绪如何受天气等现象影响(Hannak等,2012),以及意见和情绪如何在社交网络上传播(Coviello等,2014;Miller等,2011)。在 数字人文领域,文学学者通过小说中的情感流来追踪情节结构 (Jockers,2015).1

Chapter 4

Linguistic applications of classification

Having covered several techniques for classification, this chapter shiftsthe focus from mathematics to linguistic applications. Later in the chapter, wewill consider the design decisionsinvolved in text classification, as well as best practices for evaluation.

4.1 Sentiment and opinion analysis

A popular application of text classification is to automatically determinethe sentiment or opinion polarity of documents such as productreviews and social media posts. For example, marketers are interested to knowhow people respond to advertisements, services, and products (Hu and Liu,2004); social scientists are interested in how emotions are affected by phenomenasuch as the weather (Hannak et al., 2012), and how both opinions and emotionsspread over social networks (Coviello et al., 2014; Miller et al., 2011). Inthe field of digital humanities, literary scholars track plot structuresthrough the flow of sentiment across a novel (Jockers, 2015). 1

第95页(PDF第113页)第5章无监督学习

第5章无监督学习

到目前为止,我们已经假设以下设置:

_一个 训练集,您可以在其中获得观测值 x和标签y;

_仅获得观测值x的 测试集。

没有标签数据,有可能学到什么吗?这种情况称为无监督学习,我们将看到,确实有可能了解无标签观测的基础结构。本章还将探讨一些相关的场景:半监督学习(其中仅标记了一些实例)和领域适应(其中培训数据与将部署受训系统的数据不同)。

Chapter 5

Learning without supervision

So far, we have assumed the following setup:

_ a training set where you get observations x and labels y;

_ a test set where you only get observations x.

Without labeled data, is it possible to learn anything? This scenario isknown as unsupervised learning, and we will see that indeed it ispossible to learn about the underlying structure of unlabeled observations.This chapter will also explore some related scenarios: semi-supervisedlearning, in which only some instances are labeled, and domainadaptation, in which the training data differs from the data on which thetrained system will be deployed.

第二部分序列和树(Sequencesand trees)

第123页(PDF第141页)第2部分

Part II

Sequences and trees

第125页(PDF第143页)第6章语言模型

第6章语言模型

在概率分类中,问题在于计算以文本为条件的标签的概率。现在让我们考虑一个反问题:计算文本本身的概率。具体来说,我们将考虑将概率分配给单词标记序列p(w 1 ; w 2 ; :::; w M ) 的模型,其中w m ∈V。集合V是一个离散词汇,

V = {土豚; 算盘; :::; 古筝}:[6.1]

Chapter 6

Language models

In probabilistic classification, the problem is to compute the probabilityof a label, conditioned on the text. Let’s now consider the inverse problem:computing the probability of text itself. Specifically, 原由网we will consider modelsthat assign probability to a sequence of word tokens, p(w1;w2; : : : ;wM), with wm ∈V. The set V is a discrete vocabulary,

V = {aardvark; abacus; : : : ; zither}: [6.1]

第145页(PDF第163页)第7章序列标记

第7章序列标记

序列标记的目的是将标签分配给单词,或更一般地,将离散标签分配给序列中的离散元素。序列标记在自然语言处理中有许多应用,第8章概述。目前,我们将重点关注 词性标记的经典问题,即需要按语法类别标记每个词。粗略的语法类别包括用于描述事物,属性或观念的名词,以及用于描述动作和事件的动词。考虑一个简单的输入:

(7.1)他们会钓鱼。

粗粒度词性标签的字典可能包括NOUN作为唯一有效的标签,但NOUN和VERB都是罐头和鱼类的潜在标签。准确的序列标记算法应在(7.1)中为can和fish选择动词标签,但应该为fish短语can中的相同两个单词选择名词。

Chapter 7

Sequence labeling

The goal of sequence labeling is to assign tags to words, or moregenerally, to assign discrete labels to discrete elements in a sequence. Thereare many applications of sequence labeling in natural language processing, andchapter 8 presents an overview. For now, we’ll focus on the classic problem of part-of-speechtagging, which requires tagging each word by its grammatical category.Coarse-grained grammatical categories include NOUNs, which describe things,properties, or ideas, and VERBs, which describe actions and events. Consider asimple input:

(7.1) They can fish.

A dictionary of coarse-grained part-of-speech tags might include NOUN as the only valid tag forthey, but both NOUN and VERB as potential tags for can and fish. A accurate sequence labeling algorithmshould select the verb tag for both can and fish in (7.1), but it should selectnoun for the same two words in the phrase can of fish.

第175页(PDF第193页)第8章序列标记的应用

第8章序列标记的应用

序列标记在整个自然语言处理中都有应用。本章重点介绍词性标记,词法语法属性标记,命名实体识别和标记化。它还简要介绍了交互式设置的两个应用程序:对话动作识别和语言之间的代码切换点的检测。

8.1词性标记

语言的语法是一组原则,根据这些原则,流利的说话者认为单词序列在语法上是可接受的。 词性(POS)是最基本的句法概念之一,它指的是句子中每个单词的句法作用。上一章中非正式地使用了这个概念,您可能会从自己的英语学习中获得一些直觉。例如,在句子“我们喜欢素食三明治”中,您可能已经知道我们和三明治是名词,例如动词,而素食主义者是形容词。这些标签取决于单词出现的上下文:在她的饮食中,她像素食主义者一样,是介词,而素食主义者是名词。

Chapter 8

Applications of sequence labeling

Sequence labeling has applications throughout natural language processing.This chapter focuses on part-of-speech tagging, morpho-syntactic attributetagging, named entity recognition, and tokenization. It also touches briefly ontwo applications to interactive settings: dialogue act recognition and thedetection of code-switching points between languages.

8.1 Part-of-speech tagging

The syntax of a language is the set of principles under whichsequences of words are judged to be grammatically acceptable by fluentspeakers. One of the most basic syntactic concepts is the part-of-speech (POS),which refers to the syntactic role of each word in a sentence. This concept wasused informally in the previous chapter, and you may have some intuitions fromyour own study of English. For example, in the sentence We like vegetariansandwiches, you may already know that we and sandwiches are nouns, like is averb, and vegetarian is an adjective. These labels depend on the context inwhich the word appears: in she eats like a vegetarian, the word like is apreposition, and the word vegetarian is a noun.

第191页(PDF第209页)第9章形式语言理论

第9章形式语言理论

现在我们已经看到了学习 标记单个单词,单词计数向量和单词序列的方法。我们将很快进行更复杂的结构转换。这些技术大多数都可以应用于来自任何离散词汇的计数或序列。例如,隐藏的马尔可夫模型根本就没有语言上的意义。这就提出了一个尚未被本文考虑的基本问题:什么是语言?

本章将从形式语言理论的角度出发,在 形式语言理论中,语言被定义为一组 字符串,每个字符串都是来自有限字母的一系列元素。对于有趣的语言,该语言中有无数个字符串,而没有语言中的无数字符串。例如:

Chapter 9

Formal language theory

We have now seen methods for learning to label individual words, vectorsof word counts, and sequences of words; we will soon proceed to more complexstructural transformations. Most of these techniques could apply to counts orsequences from any discrete vocabulary; there is nothing fundamentallylinguistic about, say, a hidden Markov model. This raises a basic question thatthis text has not yet considered: what is a language?

This chapter will take the perspective of formal language theory,in which a language is defined as a set of strings, each of which is asequence of elements from a finite alphabet. For interesting languages, thereare an infinite number of strings that are in the language, and an infinitenumber of strings that are not. For example:

第225页(PDF第243页)第10章上下文无关的解析

第10章上下文无关的解析

解析是确定字符串是否可以从给定的上下文无关文法派生的任务,如果可以,则如何确定。解析器的输出是一棵树,如图9.13所示。这样的树可以回答谁要做什么的基本问题,并可以应用于语义分析(第12和13章)和信息提取(第17章)等下游任务。

Chapter 10

Context-free parsing

Parsing is the task of determining whether a string can be derived from agiven contextfree grammar, and if so, how. A parser’s output is a tree, likethe ones shown in Figure 9.13. Such trees can answer basic questions ofwho-did-what-to-whom, and have applications in downstream tasks like semantic analysis(chapter 12 and 13) and information extraction (chapter 17).

第257页(PDF第275页)第11章依赖解析

第11章依赖解析

上一章讨论了用于根据嵌套组成部分(例如名词短语和动词短语)分析句子的算法。但是,短语结构分析中许多歧义性的主要来源都涉及 依存问题:在何处附加介词短语或补语从句,如何限定协调连词的范围等等。这些依附决定可以用更轻量级的结构来表示:句子中单词的有向图,称为 依存解析。句法注释已将其重点转移到了这种依存结构:在撰写本文时, 普遍依存(Universal Dependencies)项目为60多种语言提供了100多个依存树库。1本章将描述依存语法基础的语言思想,然后讨论确切的依存关系。和基于过渡的解析算法。本章还将讨论基于过渡的结构预测中 学习搜索的最新研究。

Chapter 11

Dependency parsing

The previous chapter discussed algorithms for analyzing sentences in termsof nested constituents, such as noun phrases and verb phrases. However, many ofthe key sources of ambiguity in phrase-structure analysis relate to questionsof attachment: where to attach a prepositional phrase or complementclause, how to scope a coordinating conjunction, and so on. These attachmentdecisions can be represented with a more lightweight structure: a directedgraph over the words in the sentence, known as a dependency parse.Syntactic annotation has shifted its focus to such dependency structures: atthe time of this writing, the Universal Dependencies project offers morethan 100 dependency treebanks for more than 60 languages. 1 This chapter will describe thelinguistic ideas underlying dependency grammar, and then discuss exact andtransition-based parsing algorithms. The chapter will also discuss recentresearch on learning to search in transition-based structure prediction.

第三部分意义(Meaning)

第283页(PDF第301页)第3部分意义

Part III

Meaning

第285页(PDF第303页)第12章逻辑语义

第12章逻辑语义

前几章重点介绍了通过标记和解析来重建自然语言 语法(其结构组织)的系统。但是,语言技术最令人兴奋和最有希望的潜在应用中,还涉及到从语法到 语义的超越,即文本的基本含义:

Chapter 12

Logical semantics

The previous few chapters have focused on building systems thatreconstruct the syntax of natural language — its structural organization— through tagging and parsing. But some of the most exciting and promisingpotential applications of language technology involve going beyond syntax to semantics—theunderlying meaning of the text:

第305页(PDF第323页)第13章谓词参数语义

第13章谓词参数语义

本章考虑了更多的“轻量级”语义表示,这些语义表示放弃了一阶逻辑的某些方面,但着重于谓词参数结构。让我们从一个简单的例子开始思考事件的语义:

(13.1)阿莎送给伯扬一本书。

Chapter 13

Predicate-argument semantics

This chapter considers more “lightweight” semantic representations, whichdiscard some aspects of first-order logic, but focus on predicate-argumentstructures. Let’s begin by thinking about the semantics of events, with asimple example:

(13.1) Asha gives Boyang a book.

第325页(PDF第343页)第14章分布式和分布式语义

第14章分布式和分布式语义

自然语言处理中反复出现的主题是从单词到含义的映射的复杂性。在第4章中,我们看到单个单词形式(例如bank)可以具有多种含义。相反,可以通过多种表面形式(一种称为 同义词的词汇语义关系 )来创建单一含义。尽管单词和含义之间存在这种复杂的映射关系,但是自然语言处理系统通常仍将单词作为分析的基本单元。在语义上尤其如此:前两章中的逻辑和框架语义方法依赖于手工制作的词典,这些词典从单词映射到语义谓词。但是,我们如何分析包含以前没有见过的单词的文本?本章介绍了通过分析未标记的数据来学习单词含义表示的方法,从而极大地提高了自然语言处理系统的通用性。使得从未标记数据中获取有意义的表示成为可能的理论是 分布假设。

Chapter 14

Distributional and distributed semantics

A recurring theme in natural language processing is the complexity of themapping from words to meaning. In chapter 4, we saw that a single word form,like bank, can have multiple meanings; conversely, a single meaning may becreated by multiple surface forms, a lexical semantic relationship known as synonymy.Despite this complex mapping between words and meaning, natural languageprocessing systems usually rely on words as the basic unit of analysis. This isespecially true in semantics: the logical and frame semantic methods from theprevious two chapters rely on hand-crafted lexicons that map from words tosemantic predicates. But how can we analyze texts that contain words that wehaven’t seen before? This chapter describes methods that learn representationsof word meaning by analyzing unlabeled data, vastly improving thegeneralizability of natural language processing systems. The theory that makesit possible to acquire meaningful representations from unlabeled data is the distributionalhypothesis.

第351页(PDF第369页)第15章参考解析度

第15章参考解析度

引用是语言歧义最明显的形式之一,不仅影响自动自然语言处理系统,还影响流利的人类读者。避免“歧义代词”的警告在有关写作风格的手册和教程中普遍存在。但是,指称歧义不只限于代词,如图15.1所示。每个带括号的子字符串指的是在本文前面介绍的实体。这些参考文献包括他和他的代词,也包括库克的简称,以及诸如公司和公司最大的增长市场之类的 名义上的词。

参考解析包含几个子任务。本章将重点讨论 共指解析,这是对引用单个基础实体或某些情况下单个事件的文本跨度进行分组的任务:例如,蒂姆 库克他跨度,他和库克都是 共指。这些单独的跨度称为 提及,因为它们提及实体。该实体有时称为 参考。每个提及都有一组 前提,这些前提是前面提到的相互关联的;对于实体的第一次提及,先行集合为空。 解决代词照应的任务只需要识别代词的先行词。在 实体链接中,引用不解析为其他文本范围,而是解析为知识库中的实体。第 17章讨论了此任务。

Chapter 15

Reference Resolution

References are one of the most noticeable forms of linguistic ambiguity,afflicting not just automated natural language processing systems, but alsofluent human readers. Warnings to avoid “ambiguous pronouns” are ubiquitous inmanuals and tutorials on writing style. But referential ambiguity is notlimited to pronouns, as shown in the text in Figure 15.1. Each of the bracketedsubstrings refers to an entity that is introduced earlier in the passage. Thesereferences include the pronouns he and his, but also the shortened name Cook,and nominals such as the firm and the firm’s biggest growth market.

Reference resolution subsumes several subtasks. This chapter will focus on coreferenceresolution, which is the task of grouping spans of text that refer to a singleunderlying entity, or, in some cases, a single event: for example, the spansTim Cook, he, and Cook are all coreferent. These individual spans arecalled mentions, because they mention an entity; the entity is sometimescalled the referent. Each mention has a set of antecedents, whichare preceding mentions that are coreferent; for the first mention of an entity,the antecedent set is empty. The task of pronominal anaphora resolution requiresidentifying only the antecedents of pronouns. In entity linking,references are resolved not to other spans of text, but to entities in aknowledge base. This task is discussed in chapter 17.

第379页(PDF第397页)第16章话语

第16章话语

自然语言处理的应用通常涉及多句文档:从长篇幅的餐厅评论到500字报纸文章到500页小说。到目前为止,我们讨论的大多数方法都与单个句子有关。本章讨论用于处理多句子语言现象(统称为 话语)的理论和方法。话语结构具有多种特征,没有一种结构适合每种计算应用。本章涵盖一些研究最深入的话语表示,同时重点介绍用于识别和利用这些结构的计算模型。

Chapter 16

Discourse

Applications of natural language processing often concern multi-sentencedocuments: from paragraph-long restaurant reviews, to 500-word newspaperarticles, to 500-page novels. Yet most of the methods that we have discussedthus far are concerned with individual sentences. This chapter discussestheories and methods for handling multisentence linguistic phenomena, knowncollectively as discourse. There are diverse characterizations ofdiscourse structure, and no single structure is ideal for every computationalapplication. This chapter covers some of the most well studied discourserepresentations, while highlighting computational models for identifying andexploiting these structures.

第四部分应用(Applications)

第401页(PDF第419页)第4部分应用

Part IV

Applications

第403页(PDF第421页)第17章信息提取

第17章信息提取

计算机提供了强大的功能来搜索和推理结构化记录和关系数据。一些人认为,人工智能最重要的局限性不是推理或学习,而仅仅是知识太少(Lenat等,1990)。自然语言处理提供了一种有吸引力的解决方案:通过阅读自然语言文本自动构建结构化的 知识库。

例如,许多Wikipedia页面都有一个“信息框”,该信息框提供有关实体或事件的结构化信息。图17.1a中显示了一个示例:每一行代表一个实体或一个或多个实体的属性,它们位于唱片上的海上飞机上。属性集由预定义的 架构确定,该架构适用于 Wikipedia中的所有唱片集。如图17.1b所示,许多字段的值直接在同一Wikipedia页面上的文本的前几个句子中指出。

从文本自动构造(或“填充”)信息框的任务是 信息提取的一个示例。许多信息提取可以用 实体, 关系和 事件来描述。

Chapter 17

Information extraction

Computers offer powerful capabilities for searching and reasoning aboutstructured records and relational data. Some have argued that the mostimportant limitation of artificial intelligence is not inference or learning,but simply having too little knowledge (Lenat et al., 1990). Natural languageprocessing provides an appealing solution: automatically construct a structured knowledge base by reading natural language text.

For example, many Wikipedia pages have an “infobox” that providesstructured information about an entity or event. An example is shown in Figure17.1a: each row represents one or more properties of the entity IN THE AEROPLANE OVER THE SEA, a record album. The set ofproperties is determined by a predefined schema, which applies to allrecord albums inWikipedia. As shown in Figure 17.1b, the values for many ofthese fields are indicated directly in the first few sentences of text on thesameWikipedia page.

The task of automatically constructing (or “populating”) an infobox fromtext is an example of information extraction. Much of informationextraction can be described in terms of entities, relations, and events.

第431页(PDF第449页)第18章机器翻译

第18章机器翻译

机器翻译(MT)是人工智能中的“圣杯”问题之一,它具有通过促进世界各地人们之间的交流而改变社会的潜力。因此,自1950年代初以来,机器翻译就受到了广泛的关注和资助。然而,事实证明,这非常具有挑战性,尽管在可用的MT系统方面已取得了重大进展,尤其是对于英语-法语等高资源语言对而言,但我们离与人类翻译的细微差别和深度相称的翻译系统相去甚远。

Chapter 18

Machine translation

Machine translation (MT) is one of the “holy grail” problems in artificialintelligence, with the potential to transform society by facilitatingcommunication between people anywhere in the world. As a result, MT hasreceived significant attention and funding since the early 1950s. However, ithas proved remarkably challenging, and while there has been substantialprogress towards usable MT systems — especially for high-resource languagepairs like English-French — we are still far from translation systems thatmatch the nuance and depth of human translations.

第457页(PDF第475页)第19章文字产生

第19章文字产生

在自然语言处理中许多最有趣的问题中,语言就是输出。上一章描述了机器翻译的具体情况,但是还有许多其他应用程序,从研究文章的概述到自动化新闻,再到对话系统。本章重点介绍三种主要方案:数据到文本,其中生成文本以解释或描述结构化记录或非结构化感知输入;文本到文本,通常涉及将来自多种语言来源的信息融合到一个连贯的摘要中;和对话,其中文本是与一个或多个人类参与者进行互动对话的一部分。

Chapter 19

Text generation

In many of the most interesting problems in natural language processing,language is the output. The previous chapter described the specific case ofmachine translation, but there are many other applications, from summarizationof research articles, to automated journalism, to dialogue systems. Thischapter emphasizes three main scenarios: data-totext, in which text isgenerated to explain or describe a structured record or unstructured perceptualinput; text-to-text, which typically involves fusing information from multiplelinguistic sources into a single coherent summary; and dialogue, in which textis generated as part of an interactive conversation with one or more humanparticipants.

第475页(PDF第493页)附录A 概率

附录A 概率

概率论为推理随机事件提供了一种方法。通常用于解释概率论的随机事件包括硬币翻转,抽奖和天气。像选择硬币一样思考单词的选择似乎有些奇怪,特别是如果您是认真选择单词的人。但是无论是否随机,事实证明很难对语言进行确定性建模。概率为建模和处理语言数据提供了强大的工具。

Appendix A

Probability

Probability theory provides a way to reason about random events. The sortsof random events that are typically used to explain probability theory includecoin flips, card draws, and the weather. It may seem odd to think about thechoice of a word as akin to the flip of a coin, particularly if you are thetype of person to choose words carefully. But random or not, language hasproven to be extremely difficult to model deterministically. Probability offersa powerful tool for modeling and manipulating linguistic data.

第485页(PDF第503页)附录B 数值优化

附录B 数值优化

无限制的数值优化涉及最小x2RD形式的问题的求解

Appendix B

Numerical optimization

Unconstrained numerical optimization involves solving problems of theform, min x2RD

第489页(PDF第507页)参考书目

参考书目

Bibliography

(1992).

(1996).

(1997).

(1998).

…

…

(2017).

(2018).

(2018).

(2018).

Abadi, M., A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G. S.Corrado, A. Davis, J. Dean, M. Devin, S. Ghemawat, I. J. Goodfellow, A. Harp,G. Irving, M. Isard, Y. Jia, R. J ozefowicz, L. Kaiser, M. Kudlur, J.Levenberg, D. Mane, R. Monga, S. Moore, D. G. Murray, C. Olah, M. Schuster, J.Shlens, B. Steiner, I. Sutskever, K. Talwar, P. A. Tucker, V. Vanhoucke, V.Vasudevan, F. B. Viegas, O. Vinyals, P. Warden, M. Wattenberg, M. Wicke, Y.Yu, and X. Zheng (2016). Tensorflow: Large-scale machine learning onheterogeneous distributed systems. CoRR abs/1603.04467.

Abend, O. and A. Rappoport (2017). The state of the art in semanticrepresentation. See acl (2017).

Abney, S., R. E. Schapire, and Y. Singer (1999). Boosting applied totagging and PP attachment. See emn (1999), pp. 132–134.

…

…

Zhu, X. and A. B. Goldberg (2009). Introduction to semi-supervisedlearning. Synthesis lectures on artificial intelligence and machine learning3(1), 1–130.

Zipf, G. K. (1949). Human behavior and the principle of least effort.Addison-Wesley.

Zirn, C., M. Niepert, H. Stuckenschmidt, and M. Strube (2011).Fine-grained sentiment analysis with structural features. See ijc (2011), pp.336–344.

Zou, W. Y., R. Socher, D. Cer, and C. D. Manning (2013). Bilingual wordembeddings for phrase-based machine translation. See emn (2013), pp. 1393–1398.

第557页(PDF第575页)索引

索引

Index

_-conversion, 295

_-reduction, _-conversion, 293

n-gram, 24

language model, 198

p-value, 84

…

…

BIBLIOGRAPHY 569

yield, 209

Zipf’s law, 143

Under contract with MIT Press, shared under CC-BY-NC-ND license.

第569页(PDF第587页),全部PDF结束。

雅各布爱森斯坦(Jacob Eisenstein)博士简历

秦陇纪,科学Sciences20191212Thu

A.雅各布爱森斯坦(JacobEisenstein)中文简历

我从事计算语言学的研究,主要研究非标准语言,话语,计算社会科学和机器学习。我于2019年7月加入谷歌人工智能(Google AI),担任研究科学家。从2012年到2019年,我在佐治亚理工大学任教,领导了计算语言学实验室。

麻省理工学院出版社将于2019年10月发布《自然语言处理入门》(pdf版)

出版物|推特|传记素描|github |简历

研究

我的研究将机器学习和语言学结合起来,构建了自然语言处理系统,该系统可根据上下文变化而变化,并提供有关社会现象的新见解。您可以在此处,Google学术搜索和语义学术搜索中找到出版物的完整列表。这是一些最近的论文:

测量和建模语言变化。爱森斯坦。NAACL 2019 和IC2S2 教程。

上下文化嵌入的无监督域自适应:以早期现代英语为例的案例研究。汉和爱森斯坦。已被EMNLP 2019 接受。

我们如何用词做事:将文本作为社会和文化数据进行分析。Nguyen ,Liakata ,DeDeo ,Eisenstein ,Mimno ,Tromble ,Winters 。预印本。

参考读者:用于回指解析的循环实体网络。Liu ,Zettlemoyer 和Eisenstein 。ACL 2019 。

使“ 获取” 发生:社会和语言环境对词汇创新成功的影响。斯图尔特和爱森斯坦。EMNLP ,2018 年。

注意您的POV :文章和编辑趋同于Wikipedia 的中立性规范。Pavalanathan ,Han 和Eisenstein 。CSCW ,2018 年。

在线论坛中的互动式学习。基斯林,帕瓦拉纳坦,费兹帕特里克,汉和爱森斯坦。计算语言学,2018 年9 月。

使用全局图属性预测语义关系。品特和爱森斯坦。EMNLP 2018 。

克服情感分析中的语言变化并引起社会关注。杨和爱森斯坦。ACL 的交易,2017 年。

教学

2018年春季:CS 4650/7650,自然语言处理

2017年秋季:CS 8803-CSS,计算社会科学

2017年春季:CS 4650/7650,自然语言处理

2016年春季:CS4464 / 6465,计算新闻学

2015年秋季:CS 4650/7650,自然语言处理

2015年春季:CS 8803-CSS,计算社会科学

2014年秋季:CS 4650/7650自然语言处理

2014年春季:CS 8803,计算社会科学

2013年秋季:CS 4650/7650,自然语言处理

2013年春季:CS 4650/7650,自然语言处理

2012年春季:CS 4650/7650,自然语言处理

忠告

(从最新开始)

博士生:Umashanthi Pavalanathan(Twitter),Yi Yang(Asapp),Yangfeng Ji(弗吉尼亚大学)

在读博士生:Sandeep Soni,Ian Stewart,Yuval Pinter,Sarah Wiegreffe

专业服务

共同Zhu席:2016年EMNLP NLP和计算社会科学研讨会。

共同Zhu席:2014年ACL语言技术与计算社会科学研讨会

共同Zhu席:2013-2015年亚特兰大计算社会科学研讨会

高级区Zhu席:ACL 2019

区域Zhu席:NAACL 2018,EMNLP 2017,NAACL 2016,ACL 2014,EACL 2013

教程联席Zhu席:ACL 2018,NAACL 2012

学生研究工作坊,奖项和/或志愿者的协调员/Zhu席:NAACL 2016,ICML 2013,NAACL 2013

计划委员会:ACL,CONLL,EMNLP,NAACL,ICML,NIPS,CSCW,各种研讨会。所有这些场所都是开放式(OA)。

编辑委员会:TACL(OA)的动作编辑,语言技术中的语言问题(OA),语言科学出版社(OA)的语言变化系列,计算语言学(OA)

期刊审查:ACM通讯,计算语言学(OA),机器学习研究期刊(OA),人工智能研究(OA),机器学习期刊,计算语言学协会(OA)交易,社会语言学期刊,《美国统计协会杂志》,《社会语言》,《格洛萨(OA)》,《美国国家科学院院刊》,《人文科学数字奖学金》,《自然人类行为》...

我更喜欢我的审查工作集中在每个人都可以阅读的论文上。因此,除非我自己在那儿提交论文,否则我通常不会审查非OA的场所。...

B.雅各布爱森斯坦(JacobEisenstein)英文简历

|

Jacob Eisenstein Introduction to Natural Language Processingis forthcoming in October 2019 with MIT press (draft pdf) I work on computational linguistics, focusing on non-standard language, discourse, computational social science, and machine learning. In July 2019, I joined Google AI as a research scientist. From 2012 to 2019, I was on the faculty at Georgia Tech, where I led the Computational Linguistics lab. publications | twitter | biographical sketch | github | cv |

|

Research My research combines machine learning and linguistics to build natural language processing systems that are robust to contextual variation and offer new insights about social phenomena. A complete list of publications can be found here, on Google scholar, and on Semantic scholar. Here are some recent papers:

Teaching

Advising (starting with the most recent)

Professional service

|

-----------------------------

(注:相关素材[1-x]图文版权归原作者所有。)

素材(5b字)

1. JacobEisenstein. jacobeisenstein/ gt-nlp-class.[EB/OL], github,https://github.com/jacobeisenstein/gt-nlp-class/tree/master/notes, visit date: 2019-12-12-Thu

2. JacobEisenstein. Jacob Eisenstein. [EB/OL], https://jacobeisenstein.github.io/,visit date: 2019-12-12-Thu

x. 秦陇纪. 西方哲学与人工智能、计算机; 人工智能编年史; 人工智能达特茅斯夏季研究项目提案(1www.58yuanyou.com955 年8 月31 日) 中英对照版; 人工智能研究现状及教育应用; 计算机操作系统的演进、谱系和产品发展史; 数据科学与大数据技术专业概论; 数据资源概论; 文本数据溯源与简化; 大数据简化技术体系; 数据简化社区概述. [EB/OL], 数据简化DataSimp( 微信公众号), https://dsc.datasimp.org/, http://www.datasimp.org, 2017-06-06.

—END—

免责说明:公开媒体 素材出处 可溯源。本号 不持有任何倾向性,亦 不表示认可其观点或其所述。

秦农跋

科学建立在数学和现实数据基础之上。 科学有其范围并非万能,但人们仍用其扩大认知。人类认知的高级阶段是在道德、数学、逻辑、哲学、数据等思维层次,对初级的感觉、情绪、外表、印象、语言、记忆等自然社会现象认知,做出更为深刻、理性、智慧和长久的判断和总结。高级认知对错交织但形态稳定,主要存在于宗教、艺术、科学、技术等领域。若 没有数学工具支撑的科学认知,仅用语言来总结自然社会现象,拿书本文字代替实验设计、工程实践,将止步于宽泛又肤浅的语言思维型道理。

数学认知和数据技术随处可见,仅靠文化教育和专业工作者是不够的。 借助数据相关的数学和科学、算法和程序、资源和简化、机构和活动、政策和新闻,“数据简化DataSimp”公号旨在帮助大众从思维方法上接近数据殿堂。数据简化DataSimp 公号不持有任何倾向性,只提供大家的学术观点;倡导"理性之思想,自主之精神",专注于学者、学术、学界的发展进步,不定期向您推荐人类优秀学者及其文章;欢迎大家分享、贡献和赞赏、支持科普~

数据简化社区语言处理专辑44篇:

“ 数据简化DataSimp” 数据技术科普 |

下载PDF 后赞赏支持 |